Elon Musk pushed to use Tesla’s internal driver monitoring camera to record video of drivers’ behavior, primarily so that Tesla could use this video as evidence to defend itself from investigations in the event of a crash, according to Walter Isaacson’s new biography of the Tesla CEO.

Walter Isaacson’s biography of Elon Musk is out, resulting in several revelations about Tesla’s past, present, and future. One of these revelations is a potential use for the Tesla internal driver monitoring camera that is included on current Teslas.

Many cars have a camera like this to monitor driver attentiveness and warn drivers if they seem to be paying too little attention to the road, though other automakers typically use infrared cameras and the data never leaves the car.

Teslas have had these cameras for several years, first showing up on the Model 3 in 2017 and later on the S/X, but they weren’t activated until 2021. Before that, Tesla determined attention by detecting steering wheel torque (a safeguard that was fairly easy to defeat).

Nowadays, the camera is used to ensure that drivers are still watching the road while Autopilot or FSD is activated, as both systems are “Level 2” self-driving systems and thus require driver attention. The hope, though, was to potentially use the camera for cabin monitoring if Tesla’s robotaxi dream is ever realized.

But that wasn’t the only thing Tesla wanted to use the cameras for. According to the biography, Musk pushed internally to use the camera to record clips of Tesla drivers, initially without their knowledge, with the goal of using this footage to defend the company in the event of investigations into the behavior of its Autopilot system.

Musk was convinced that bad drivers rather than bad software were the main reason for most of the accidents. At one meeting, he suggested using data collected from the car’s cameras, one of which is inside the car and focused on the driver, to prove when there was driver error. One of the women at the table pushed back. “We went back and forth with the privacy team about that,” she said. “We cannot associate the selfie streams to a specific vehicle, even when there’s a crash, or at least that’s the guidance from our lawyers.”

– Walter Isaacson, Elon Musk

The first point here is interesting because there are indeed plenty of bad drivers who misuse Autopilot and are certainly responsible for what happens while it is activated.

As mentioned above, Autopilot and FSD are “Level 2” systems. There are six levels of self-driving – 0 through 5 – and levels 0-2 require active driving at all times, while with levels 3+, the driver can turn their attention away from the road in certain circumstances. But despite Tesla’s insistence that drivers still pay attention, a study has shown that driver attention does decrease with the system activated.

We have seen many examples of Tesla drivers behaving badly with Autopilot activated, though these egregious examples aren’t the only issue here. There have been many well-publicized Tesla crashes, and in the immediate aftermath of an incident, rumors often swirl about whether Autopilot was activated. Regardless of whether there is any reason to believe that it was activated, media reports or social media will often focus on Autopilot, leading to an often unfair public perception that there is a connection between Autopilot and crashing.

But in many of these cases, Autopilot eventually gets exonerated when the incident is investigated by authorities. Oftentimes, it’s a simple matter of the driver not using the system properly or relying on it where they should not. These exonerations often include investigations where vehicle logs are pulled to show whether Autopilot was activated, how often it had to remind the driver to pay attention, what speed the car was driving, and so on. Cameras could add another data point to those investigations.

Even if crashes happen due to human error, this could still be a problem for Tesla because human error is often a design issue. The system could be designed or marketed to better remind drivers of their responsibility (in particular, don’t call it “full self-driving” if it doesn’t drive itself, perhaps?), or more safeguards could be added to ensure driver attention.

The NHTSA is currently probing Tesla’s Autopilot system, and it looks like safeguards are what they will focus on – they will likely force changes to the way Tesla monitors drivers for safety purposes.

But then Musk goes on to suggest that not only are these accidents generally the fault of the drivers, but that he wants cabin cameras to be used to spy on drivers, with the specific purpose of winning lawsuits or investigations brought against Tesla (such as the NHTSA probe). Not to enhance safety, not to collect data to improve the system, but to protect Tesla and his ego – to win.

In addition to this adversarial stance against his customers, the passage suggests that his initial idea was to collect this data without informing the driver, with the idea of adding a data privacy pop-up only coming later in the discussion.

Musk was not happy. The concept of “privacy teams” did not warm his heart. “I’m the decision-maker at this company, not the privacy team,” he said. “I don’t even know who they are. They’re so private you never know who they are.” There were some nervous laughs. “Perhaps we can have a pop-up where we tell people that if they use FSD [Full Self-Driving], we’ll collect data in the event of a crash,” he suggested. “Would that be okay?”

The woman thought about it for a moment, then nodded. “As long as we’re communicating it to customers, I think we’re okay with that.”

– Walter Isaacson, Elon Musk

Here, it’s notable that Musk says he’s the decision-maker and that he doesn’t even know who the privacy team is.

In recent years and months, Musk has seemed increasingly distracted in his management of Tesla, recently focusing far more on Twitter than on the company that has catapulted him to the top of the list of the world’s richest people.

It might be good for him to have some idea of who the people working under him are, especially the privacy team, at a company that has active cameras running on the road, and in people’s cars and garages, all around the world, all the time – particularly when Tesla is currently facing a class action lawsuit over video privacy.

In April, it was revealed that Tesla employees shared videos recorded inside owners’ garages, including videos of people who were unclothed and ones where some personally identifiable information was attached. And in Illinois, a separate class action lawsuit focuses on the cabin camera specifically.

While Tesla does have a dedicated page describing its data privacy approach, a new independent analysis released last week by the Mozilla Foundation ranked Tesla in last place among car brands – and ranked cars as the worst product category Mozilla has ever seen in terms of privacy.

So, this blithe dismissal of the privacy team’s concerns doesn’t seem productive and does seem to have had the expected result in terms of Tesla’s privacy performance.

Musk is known for making sudden pronouncements, demanding that a particular feature be added or subtracted, and going against the advice of engineers to be the “decision-maker” – regardless of whether the decision is the right one. Similar behavior has been seen in his leadership of Twitter, where he has dismantled trust & safety teams, and in the chaos of the takeover, he “may have jeopardized data privacy and security,” according to the DOJ.

While we don’t have a date for this particular discussion, it does seem to have happened at least post-2021, after the sudden deletion of radar from Tesla vehicles. The deletion of radar itself is an example of one of these sudden demands by Musk, which Tesla is now having to walk back.

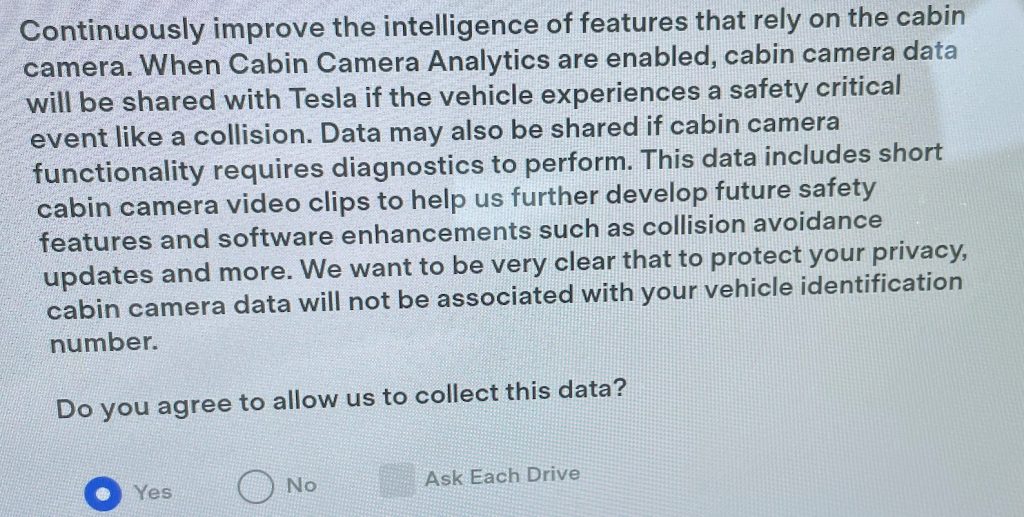

For its part, Tesla does currently have a warning in the car that describes what the company will do with the data from your internal camera. This is what it looks like currently in a Model 3:

Tesla’s online Model 3 owner’s manual contains similar language describing the usage of the cabin camera.

Notably, this language focuses on safety rather than driver monitoring. Tesla explicitly says that the camera data does not leave the vehicle unless the owner opts in and that the data will help with future safety and functionality enhancements. It also says that the data is not attached to a VIN, nor is it used for identity verification.

Beyond that, we also haven’t seen Tesla defend itself in any Autopilot lawsuits or investigations by using the cabin camera explicitly – at least not yet. With driver monitoring in focus in the current NHTSA investigation, it’s entirely possible that we might see more usage of this camera in the future or that camera clips are being used as part of the investigation.

But at the very least, this language in current Teslas does suggest that Musk didn’t get his wish – perhaps to the relief of some of the more privacy-interested Tesla drivers.
